What is really Lost in Translation?

Maybe it’s the ignorance. EVERYBODY’s ignorance.

Vincenzo Latronico, an Italian translator (of literary, not technical works, remember this), just shared his thoughts about what the recent advances in Artificial Intelligence (AI) really mean. There are at least two points in his great post that deserve as much exposure as they can get.

The first is Latronico’s report of his casual meeting, two years ago at a party, with a few Machine Learning and AI researchers. When he told them what he does for a living, they were really embarrassed and puzzled, as if they had just met some really weird animal: “Wow,” one of them said, “I didn’t think translation was still something that humans do.”

Latronico, like a gentleman, labels that answer as just a “completely understandable and moreover symmetrical” ignorance, on the part of those researchers, of what translators really are and do.

Maybe it’s something more than that, and not even symmetrical. I just bought a Chinese power drill, and am happy with it because it has just the price/performance ratio that I actually needed. However, if I hadn’t already been quite familiar with how power drills work, I would never have learned it without help, just from the horrible, maybe even dangerous, automatically translated manual that comes with that drill. And that is a power drill with two or three buttons, not a fighter jet.

I don’t know if automatic translation will ever get good enough to really translate any literary work longer than a haiku, all by itself AND with the same quality as a human translator with a solid literary education in both the source and target language. But whoever really thinks, in 2022, that automatic translation is already at that level sounds like a pretty ignorant and limited person to me, no matter how good they may be at programming, or in any other technical field. It’s sad, really.

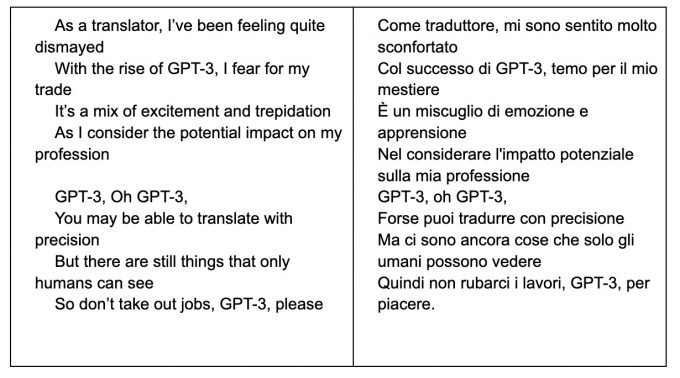

Sample poetry written by chatGPT


Probability is key

The other important point in Latronico’s post is his explanation of what chatGPT or automatic translators do, which I summarize in the following paragraphs.

A language model is essentially a huge statistical machine. It analyzes billions of words (this is the “learning” of “machine learning”), and calculates how likely it is that, given a certain series of words, one word will follow rather than another. By extending the process recursively, it is possible to generate texts which are formally correct, pertinent and often coherent. For example, if you ask chatGPT to write something to go with the title “[Brand] announces the launch of innovative [name] watch series”, the statistically probable text that may follow is a press release. Therefore, chatGPT will write something similar to all the press releases it was trained on, and if it places the “Brand” in Milan it will be simply because it noticed in those same texts that “it is more likely for a luxury watch brand to be based in Milan than in Malmö”.
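To make the "statistical machine" idea concrete, here is a minimal sketch in Python of the simplest possible version of it: a model that only counts which word follows which. It is a toy, not how chatGPT is actually built (real systems use neural networks and look at much more than the previous word), and the tiny corpus and function names are invented just for this illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def next_word_probabilities(counts, word):
    """Turn the raw follower counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: n / total for w, n in followers.items()} if total else {}

def generate(counts, start, length=5):
    """Repeatedly append the most probable next word."""
    words = [start]
    for _ in range(length):
        probs = next_word_probabilities(counts, words[-1])
        if not probs:
            break
        words.append(max(probs, key=probs.get))
    return " ".join(words)

# Hypothetical three-sentence "training set", made up for this example.
corpus = (
    "the luxury brand is based in milan . "
    "the watch brand is based in milan . "
    "the furniture brand is based in malmo ."
)
model = train_bigrams(corpus)

# After "in", "milan" was seen twice and "malmo" once: 2/3 versus 1/3.
print(next_word_probabilities(model, "in"))

# The generated text picks "milan" only because it is more frequent.
print(generate(model, "brand", length=4))
```

Note that "malmo" still keeps a probability of one third: a version of this toy that samples at random instead of always picking the most likely word would place the brand there one time out of three.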

If this is what happens, it has three very interesting consequences. One is that the texts that anything like chatGPT may consistently assemble with a high average quality, and little to no human polishing afterwards, are exactly, and only, those in which probability is an effective guide. That is, the same formulaic, and to some extent mechanical, texts that a dumb but omniscient human slave may write, be they press releases, contracts, (some) translations and, of course, software. The second consequence follows from that last item: “those responsible for automation risk being automated themselves”. Last but not least, according to Latronico, is the fact that the very architecture of these systems makes perfection difficult to achieve, because the underlying “machine learning” models are trained on all available texts, including the wrong ones, which will therefore appear, sooner or later, with a probability always greater than zero.

The risks: ignorance, and what Zuckerberg said

Personally, I do not know machine learning algorithms and their theoretical bases well enough to be sure that what Latronico writes is completely correct. I just strongly suspect it is, for two reasons. The first is functional illiteracy: a functional illiterate is “a person that cannot use reading, writing, and calculation skills for his/her own and the community’s development”, and I strongly fear that there are already enough such people around, even among PhDs, to make certain translations “good enough for everybody”. The other reason is the almost explicit push, from many sides, to make people use fewer and fewer words.

Even a professor says so

(Not) ironically, just a few days after Latronico’s post, an Italian university professor explained on YouTube how and why he thinks Russia violated the 1994 Budapest Memorandum. Among his sources he explicitly mentioned a New York Times article by a “William J. Ampio”, spelling out that surname, “A, M, P, I, O”, to make it easier for everybody to check his sources. The fact is, there is no trace of any W.J. Ampio on the NYT website. But there is a W.J. Broad, and “ampio” is the literal Italian translation of “broad”. In other words, that professor was almost surely reading, without much attention, a Google translation of some article by W.J. Broad. Which is exactly the point made above.

Who writes this, why, and how to help

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend more every year on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can continue to inform, for free, parents, teachers, decision makers, and everybody else who should know more stuff like this. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!