Suicide, 3D printed and AI-monitored

Heck, why didn’t anybody think of this before?

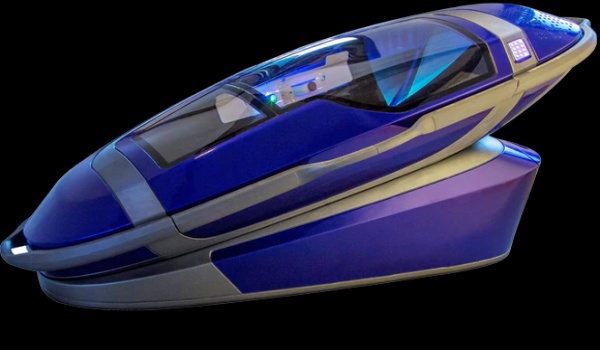

Did you know that a “suicide pod”, designed to make “dying out of doctors' hands with AI and 3D printing” easier, passed a legal review in Switzerland last December?

I didn’t, maybe partly because, as of March 2022, it’s not true. But regardless of the outcome of that legal review, the whole concept deserves some discussion, as some of its parts are… puzzling.

Demedicalisation of death? Next question, please.

As far as I understand, this pod, called Sarco, would circumvent restrictions on assisted suicide and “demedicalises death” because it can only be operated from the inside. The person who needs it sits inside, closes the pod and pushes a button all by himself, without any need to convince anyone else, medic or not, to “assist” in any way. I have neither the expertise nor the intention to comment on this in any way, so let’s look at the rest.

The place of 3D printing in assisted suicide

Initially, I thought that the 3D printing and AI (Artificial Intelligence) parts were totally irrelevant fluff, buzzwords put there just to get clicks and sales.

Then I discovered that at least the 3D printing has a real purpose in the picture, maybe debatable but surely concrete, and it’s not money. The Sarco pod is promoted by an assisted dying advocacy organization that wants to “allow anyone to download the design and 3D-print it themselves”. Also, says the FAQ, that pod “is not and never will be for sale”. So far so good, more or less.

The joke: “this is restricted”

From the same piece: “While in theory anyone could print the Sarco, Exit International will not provide the blueprints to anyone aged under 50 years old, and even once printed access to the capsule will remain restricted, according to information on the organisation’s website.”

Yeah, right. The Sarco design files are special files that cannot be copied, are hosted on servers that nobody will ever succeed in cracking, and will only be downloaded by users with equally unbreakable computers. Sure. Or, by the time I write this, those files have already been copied to God knows how many unauthorized computers.

It would have been more serious to say something actually possible, like “Relax! This thing is so specialised, complex and expensive that nobody will bother to do it illegally. Because, with or without 3D printing, there are much cheaper, equally painless and equally effective ways to kill yourself”. And indeed, this FAQ does point out that “A Sarco will never lend itself to rash, impulsive action (irrational suicide)”.

Just for completeness, not that it really matters in the big picture: even the claim that the Sarco “can only be operated from the inside” is laughable. Anybody with enough money, equipment and skills to 3D print one of those things will also be able to move that button outside in minutes.

The creepier part: AI screening

“In the future, an AI screening process will allow [that organization] to ‘demedicalise’ the dying process by removing the need for medical professionals to be involved”. OK, now I feel good. Not.

According to Gizmodo, that AI screening system should “establish the person’s mental capacity… [the] original conceptual idea is that the person would do an online test and receive a code to access the Sarco”.

In another interview, the project leader said, “We want to remove any kind of psychiatric review from the process and allow the individual to control the method themselves.”

I don’t really know what to make of this. At a minimum, it’s simply false that there is no “psychiatric review” here. There still is one, all right, and there always will be. It will just be hidden in the “AI screening” software, designed according to the biases, psychiatric data and knowledge (or lack thereof) present in the brains of its programmers.

If anything, performing the mental capacity assessment, psychiatric review or whatever you want to call it with software will make it less accountable. Which I understand may be the whole point, but no thanks.

(added on 2023/01/05, just in case you needed even more arguments to get why the AI screening part of this thing is a bad idea: Artificial Intelligence just cannot handle certain problems)

Who writes this, why, and how to help

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend more every year on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can keep informing, for free, parents, teachers, decision makers, and everybody else who should know more stuff like this. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!