The problem with some Artificial Intelligence is NOT discrimination

It’s that it simply does not work.

I have written several times about how existing Artificial Intelligence (AI) software can discriminate against the people it targets. This time, I’d like to point you to a great summary of the real, “upstream” problem:

No matter how much they are marketed as THE solution to ALL sorts of problems, AI and machine learning simply cannot work in some domains.

This is well explained, with plenty of examples that you really should read, in a slideshow titled “How to recognize AI snake oil”, whose main conclusions are that:

- Companies advertising AI as the solution to all problems have been helped along by credulous media [and policy makers]

- Certain AI systems, for example those screening job candidates, are “essentially elaborate random number generators”

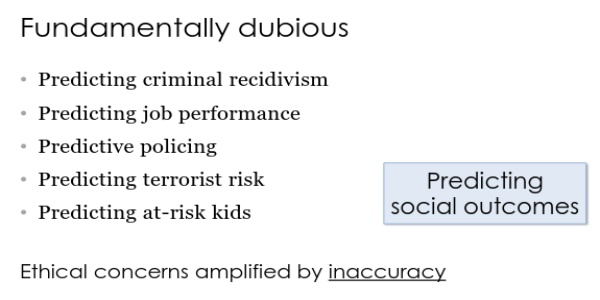

- The most “fundamentally dubious” AI applications are indeed those made to “predict social outcomes”, from criminal recidivism to job performance

If AI and machine learning just cannot do certain jobs

This has two consequences. The first is that, if algorithmic decision-making is unsuited to certain tasks to begin with, detecting (and correcting) cases where it discriminates is certainly possible, as discussed in this paper, but at best that only makes the algorithms FAIL… more fairly than before.

The other obvious consequence is that there is little, possibly very little, substance in the belief that human oversight can significantly correct and improve algorithmic decision-making once it has been implemented. That assumes, of course, that all the stakeholders even know it was implemented, which too often is NOT the case.

The only solution, discussed in the last link, is to limit the damage by “incorporating an algorithm into decision-making” only when:

- first, it can be demonstrated that it is appropriate (and actually useful)

- second, those proofs HAVE been carefully reviewed and approved before the algorithm is actually adopted

Luckily, those are decisions that should be fairly easy to take without any algorithm. Right?

Who writes this, why, and how to help

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend more every year on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can keep informing, for free, parents, teachers, decision makers, and everybody else who should know more about stuff like this. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!