AI will never give you driverless cars

Unless it DOES become like the human brain, that is.

There is a piece just posted on Medium that may, for all I know, be completely wrong. But if it isn't, it may be the most fascinating thing you read this year about Artificial "Intelligence" (AI), and about the theoretical reason (besides the many practical ones) why truly driverless cars will fail. In one sentence: the artificial intelligence of today works in a way that is completely different from the real intelligence of the human brain, and is therefore a dead end.

How the Artificial Intelligence of TODAY works

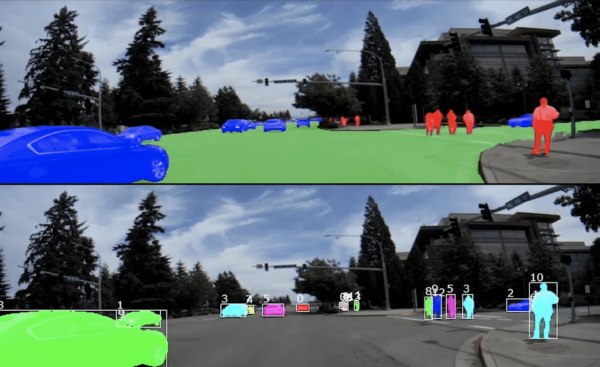

<u><em><strong>CAPTION:</strong>

<a href="https://neptune.ai/blog/self-driving-cars-with-convolutional-neural-networks-cnn" target="_blank">How driverless cars see the roads, thanks to deep learning (click for source)</a>

</em></u>

The most successful form of Artificial Intelligence used today, deep learning, is, according to that post, essentially a "rule-based expert system". This simply means that deep learning:

- first uses powerful computers and LOTS of pre-categorized data to automatically create rules "in the form, if A then B, where A is a pattern and B a label or symbol representing a category."

- then uses the models, or representations, described by those sets of rules to recognize what it is looking at (e.g. human faces, text, or anything else), and reacts accordingly.
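To make the post's characterization concrete, here is a deliberately tiny Python sketch of "if A then B" rules built automatically from pre-categorized samples, then used to recognize new input. It is a toy under loud assumptions: real deep learning networks do not store an explicit lookup table of rules, and the patterns, labels, and the helper names `learn_rules` and `recognize` are all hypothetical, chosen only to mirror the two steps listed above.

```python
from collections import Counter, defaultdict

# Toy illustration only (hypothetical, NOT how real deep learning is built):
# rules of the form "if pattern A then label B", created from labeled samples.

def learn_rules(samples):
    """Build one rule per distinct pattern: pattern -> its most frequent label."""
    votes = defaultdict(Counter)
    for pattern, label in samples:
        votes[pattern][label] += 1
    return {pattern: counts.most_common(1)[0][0] for pattern, counts in votes.items()}

def recognize(rules, pattern):
    """Apply the rules; return None when no rule matches the pattern."""
    return rules.get(pattern)

# Hypothetical pre-categorized data: (color, shape) -> label.
training = [
    (("red", "octagon"), "stop sign"),
    (("red", "octagon"), "stop sign"),
    (("white", "rectangle"), "truck"),
    (("yellow", "diamond"), "warning sign"),
]

rules = learn_rules(training)
print(recognize(rules, ("red", "octagon")))  # stop sign
print(recognize(rules, ("blue", "circle")))  # None: no rule was ever learned for this
```

Asking about a pattern that never appeared in the training data returns `None`: with no matching rule, there is simply nothing to recognize.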

How the human brain works

According to that post, unlike computers, the brain does not have enough capacity to store accurate models of the world. Besides, even if it did have the capacity, the brain would be too slow to process and update those models in real time whenever anything in the real world moved.

Therefore, unlike deep learning, the human brain does not create internal representations of objects in the world. Instead, it must continually sense the world in real time in order to interact with it.

Whenever it meets a new pattern, the brain instantly adapts its existing perceptual structures to see that pattern. No new structures are learned.

The brain, that is, does not model the world at all. It just learns how to see the world directly.

And that is where the problems start

The deep learning approach of building and using models is not intrinsically bad. Its real problem is that those models, and the processes based on them, are brittle. Deep learning models must be trained with many samples (e.g. many images of a certain type) before they can even attempt to recognize objects. But as soon as they face a situation for which there was no usable sample, that is no "rule", they fail. Therefore, letting those models loose outside strictly controlled environments (e.g. automated factories) is asking for trouble.
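Failure does not always mean "no answer", though. The following Python sketch swaps in a nearest-centroid classifier (a deliberately simple stand-in, not a neural network) trained on hypothetical (width, height) measurements in metres. Because it has no notion of "none of the above", an object unlike any training sample still gets one of the labels it already knows. The data, labels, and function names are all assumptions made for illustration.

```python
import math

# Hypothetical training data: (width_m, height_m) -> label.
# Nearest-centroid classification is a simple stand-in, NOT a neural network.
TRAINING = [
    ((1.8, 1.5), "car"),
    ((1.9, 1.4), "car"),
    ((0.5, 1.7), "pedestrian"),
    ((0.6, 1.8), "pedestrian"),
]

def train_centroids(samples):
    """Average the feature vectors of each label into one centroid per label."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def classify(centroids, features):
    """Return the label of the closest centroid and the distance to it.

    There is no 'unknown' answer: every input gets one of the trained labels.
    """
    best = min(centroids, key=lambda label: math.dist(centroids[label], features))
    return best, math.dist(centroids[best], features)

centroids = train_centroids(TRAINING)
print(classify(centroids, (1.7, 1.5)))   # familiar input: labeled "car"
print(classify(centroids, (15.0, 4.0)))  # unlike any sample, yet still labeled "car"
```

The distance returned for the second input is large, but nothing in the classifier itself turns that into a refusal; any such safeguard would have to be designed and added separately.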

But this, argues that Medium post, is exactly what happened in 2016 to one of Tesla Motors' cars while it was on Autopilot: the neural network failed to recognize a situation, causing a fatal accident.

The obvious consequence, concludes that post, is that the Artificial Intelligence community will never solve the problem of truly generalized intelligence (which is mandatory if you want really "driverless" cars!) unless it completely abandons that approach, acknowledging that computer vision as it is today works too differently from human vision ever to become equally intelligent.

Fascinating, isn’t it? Especially for Tesla stockholders, of course.

Who writes this, why, and how to help

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend more every year on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can keep informing, for free, parents, teachers, decision makers, and everybody else who should know more about stuff like this. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!