Why judges should NEVER START from algorithms...

and even less END with them.

It appears (*) that, in 2016, US judges, probation and parole officers were already “increasingly using algorithms to assess a criminal defendant’s likelihood of becoming a recidivist - a term used to describe criminals who re-offend.”

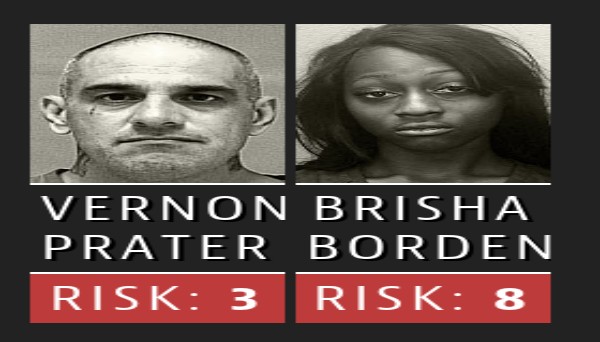

And it also seems that things were not, so to speak, going equally well for everybody.

An analysis of one of the most widely used software tools of this kind, called COMPAS (which stands for Correctional Offender Management Profiling for Alternative Sanctions), found that “black defendants were far more likely than white defendants to be incorrectly judged to be at a higher risk of recidivism, while white defendants were more likely than black defendants to be incorrectly flagged as low risk”.

In more detail, a comparison of “the recidivism risk categories predicted by the COMPAS tool to the actual recidivism rates of defendants in the two years after they were scored [found] that the score correctly predicted an offender’s recidivism 61 percent of the time, but was only correct in its predictions of violent recidivism 20 percent of the time”.

What do the developers say?

[One of the developers of COMPAS] testified that he didn’t design his software to be used in sentencing. “I wanted to stay away from the courts,” he said, explaining that his focus was on reducing crime rather than punishment. “But as time went on I started realizing that so many decisions are made, you know, in the courts. So I gradually softened on whether this could be used in the courts or not.”

Still, the developer testified, “I don’t like the idea myself of COMPAS being the sole evidence that a decision would be based upon.”

All this sounds like just another consequence of prisons being a business, doesn’t it?

(*) The technical details, that is, how the study was performed, on which data, etc., are all here.