Schools keep using algorithms they don't understand

Because of money, of course. Or the lack thereof.

Major universities are using advising software that factors in students' race, among other variables, to predict how likely they are to drop out of school, says The Markup.

The practical consequence is a higher probability that college advisors tell Black and other minority students not to aim for certain majors, perpetuating and increasing “educational steering”.

How much higher? The article says that there is a university in the USA where “Black women are 2.8 times as likely to be labeled high risk as White women, and Black men are 3.9 times as likely to be labeled high risk as White men”.

I mean, just MEASURE HER. How could she ever succeed in college?


An unchangeable past means an unchangeable FUTURE

Besides race, or even before it, the software is also “trained” on historical student data: anywhere from two to 10 or more years of student outcomes from each individual client school. This can surely help a lot to find, or demonstrate, where and when the problems really started, that is, where to act and what to do to end them for good… in a decade or two, at least.

But before we get there, every young person seeking admission to a college today is a totally different human being than she was 10 years earlier. If data so old become significant factors when deciding the future of such a young person, “you’re necessarily encoding lots of racist and discriminatory practices that go uninvestigated”.

Of course, all human beings are unique, reality is complex, and one’s personal past and achievements are relevant. Someone who consistently struggled to finish math homework for 12 years may be well advised not to pursue a degree in Math, or Physics. And someone who never, ever read a book that wasn’t assigned as homework should probably not invest further time in Literature, or Philosophy courses, no matter how high his scores were in high school (yes, I’ve known students like that). But no such advice should seriously rely on parameters like race.

Why does this happen? Money, of course.

Money, and its tendency to concentrate where it already is. Schools and universities cannot waste money they don’t have, even if this means no education for a better future:

- “The rise of predictive analytics has coincided with significant cuts in government funding for public colleges and universities.”

- Racially influenced risk scores just “reflect the underlying equity disparities that are already present on these campuses and have been for a long time.”

The way out

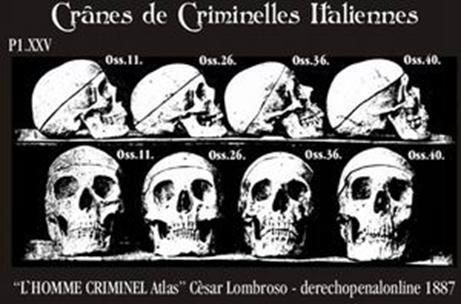

Racism and other arbitrary discriminatory practices have always existed, everywhere. They were always stupid, and evil, and could take the most diverse forms, or targets. Just ask Lombroso, and his measurements of the skulls of Italian criminals.

What is different now is that, even if it made sense to fix discrimination inside, or with, college advising software, that is, only after someone has already been discriminated against throughout her most formative years, you cannot fix what you don’t know.

Everybody with a caliper and a minimum of intellectual honesty can, after an hour of open conversation with another individual, discover how stupid it is to issue a verdict on the human nature and value of that specific individual on the basis of “wrong” skull measurements, skin color, or any other purely physical parameter.

With software like that, it’s a totally different matter. Student advisers say they’re given little guidance on how to use the risk scores, but who could give such guidance when, to quote some advisers interviewed by The Markup:

- “I certainly haven’t had a lot of information from behind the proprietary algorithms”

- “What I learned…, over and over, about the process was that the algorithm to determine risk was proprietary and protected”

So, the first thing to do in cases like these is to open and publish the algorithms. The second is to never let them have the last word, especially about the future of any individual.