What's happening with Ethics in Artificial Intelligence?

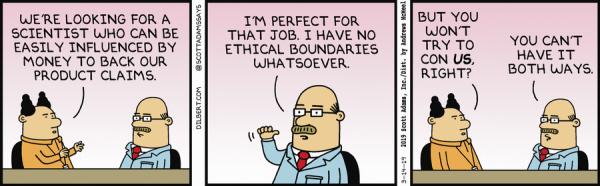

Don’t you dare REALLY watch the watchmen. Especially the “ethical” ones.

Something worrying happened at Google in December 2020. It is still unfolding, and it is a symptom of a much more general problem. This post is an attempt to summarize the main points of the story so far, in the public interest.

The background: who is Timnit Gebru?

Timnit Gebru is a widely respected leader in AI ethics research, known among other things for studying how facial recognition may discriminate against women and people of color. Google hired Gebru to lead a research group on accountability, fairness and ethics in artificial intelligence, in order to improve and practice its own ethical AI principles.

Then, in December 2020, Google fired Gebru. The problem was a paper, by Gebru and others, that highlights the risks of something crucial for Google: “large language models” or, in simpler English, software that can converse with people.

The Google Walkout movement immediately supported Gebru, publishing many details about the firing and about how Google handled the paper.

Update, 2021/03/01: in early February 2021, an engineering director and a software developer quit Google over the dismissal of Gebru. A couple of weeks later, Google fired another of its top Artificial Intelligence (AI) researchers. It seems that the researcher, who had co-led the “ethics in AI” team with Gebru for about two years, had violated the company’s code of conduct and security policies by “using an algorithm to comb through her work communications, looking for evidence of discrimination against Gebru”.

What does that paper say?

According to this article, the main concerns expressed in the paper are about:

Environmental footprint, and monopolies

Training large AI models consumes a lot of electricity, at rates that have been “exploding since 2017”. Besides, if using those models requires so much energy and hardware, only very rich organizations can use them, “while climate change hits marginalized communities hardest”. The conclusion would be that “It is past time for researchers to prioritize energy efficiency and cost to reduce negative environmental impact and inequitable access to resources”.

Surveillance, discrimination and perpetuation of biases

The models collect all the data they can from the internet, so there’s a risk that racist, sexist, and otherwise abusive language ends up in the training data. And since the training data sets are so large, it’s hard to audit them to check for these embedded biases.

“Misdirected research effort”

Though most AI researchers acknowledge that large language models don’t actually understand language and are merely excellent at manipulating it, Big Tech can make money from models that manipulate language more accurately, so it keeps investing in them. “This research effort brings with it an opportunity cost… Not as much effort goes into working on AI models that might achieve understanding”.

Giving each concern the right weight

It seems that training one of those language models that can communicate with people generates as many emissions as five cars over their whole lifetimes. In and of itself, that may seem a lot, but in reality it is almost nothing compared to the many millions of cars that clog streets and lungs, all too often for no real need. Or to the impact of industrial agriculture and farming, or concrete, or high speed trading, and many other not exactly rational activities. Besides, model training is not something that happens every day, or week. At least so far.

The real problems with the pollution created by one language model are that:

- first of all, priorities. Biased language models that are only used by multinationals would harm people even if training them polluted as much as half a car, instead of five.

- if the only way to eliminate discrimination and biases in a language model is to have thousands and thousands of them, that take into account differences among languages, cultures, context and so on… and to re-train them constantly, as the paper implies… we are not talking about five cars anymore. In other words, what if it turns out that “ethical Artificial Intelligence” and “environmental sustainability” are mutually exclusive goals? What would Wall Street say?

What can we conclude so far?

In this story there are, at least:

- Lack of diversity: it’s important to empower researchers who are focused on AI’s impacts on people, particularly marginalized groups. Even better would be funding researchers who are from these marginalized groups.

- Huge conflicts of interest in research on these technologies, because only the few big companies that are making huge money from them can fund, and are funding, such research. Those firings prove that in-house AI ethics researchers have little or no control over what their employers do. Not just inside Google, of course: “A 2020 study found that at four top universities, more than half of AI ethics researchers whose funding sources are known have accepted money from a tech giant.”

- Solutionism, again: Big Tech steers every conversation about ethics in AI towards solutionism, that is, purely technical fixes to social problems (which are often intensified by tech): “Google’s main critique of Gebru’s peer-reviewed paper… [was that it] didn’t reference enough of the technical solutions to the challenges of AI bias and outsized carbon emissions”.

- Self-perpetuation of “Big Tech”: if the only way to have a useful and “not-evil” AI is to use even more money, energy and data than today, the unavoidable conclusion is that only those who are already “Big Tech” today can lead society there, and nobody should hinder their plans.

Who writes this, why, and how to help

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend more every year on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can continue to inform, for free, parents, teachers, decision makers, and everybody else who should know more stuff like this. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!