Artificial Intelligence is like Jessica Rabbit

It’s not bad. WE just draw it that way.

One month ago I commented on how the quality of healthcare supported by Artificial Intelligence (AI) depends on where you live. Now there is more news of the same kind, making the whole issue even clearer.

Scientific American and ZDNet report that healthcare AI systems are biased, when not outright racist.

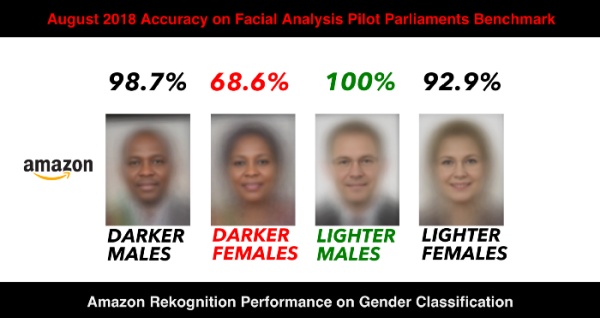

<u><em><strong>CAPTION:</strong>

<a href="https://medium.com/@Joy.Buolamwini/response-racial-and-gender-bias-in-amazon-rekognition-commercial-ai-system-for-analyzing-faces-a289222eeced" target="_blank">Source: Racial and Gender bias in Amazon Rekognition</a>

</em></u>

As happens in many, if not all, cases like this, the problem is not “Artificial Intelligence”, which is just software, and much less intelligent than its name may lead you to believe.

The problem starts well before AI

Here are a few quotes from both articles to help me explain what it is.

- Six algorithms used on an estimated 60-100 million patients nationwide were prioritizing care coordination for white patients over black patients for the same level of illness (here is one example)

- Algorithms trained on the costs in insurance claims data predicted which patients would be expensive in the future based on who was expensive in the past.

- But since, historically, less is spent on black patients than on white patients, simply because the former are underserved by healthcare (and therefore at greater risk), the algorithm ended up perpetuating existing bias in healthcare.

- AI will soon be critical to hospitals' survival, particularly as the pandemic bleeds providers of revenue.

- One study found that hospitals that shared data were more likely to lose patients to local competitors.

- To solve these problems, some organizations have started baking inclusivity and fairness into the data-gathering process… For instance, Google recently released an AI breast cancer screening tool that it has been testing to make sure it performs equally well across different geographical regions.
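To see why "predicting who will be expensive from who was expensive" perpetuates bias all by itself, here is a toy sketch in Python. All the numbers are hypothetical and not taken from the articles: two groups of patients with identical illness levels, an assumed historical spending rate of 1000 per severity point for group A and 700 for group B, and six places in a care program. The sketch compares ranking patients by past cost (as the quoted algorithms did) with ranking them by how sick they actually are.

```python
# Toy model, all numbers hypothetical: two groups of patients with IDENTICAL
# illness levels, but historically less money spent per unit of illness on group B.
ILLNESS_LEVELS = list(range(1, 11))      # severity 1..10, same for both groups
SPEND_PER_UNIT = {"A": 1000, "B": 700}   # assumed past spending per severity point


def build_patients():
    """Return (group, illness, past_cost) tuples for every patient."""
    return [
        (group, level, level * rate)
        for group, rate in SPEND_PER_UNIT.items()
        for level in ILLNESS_LEVELS
    ]


def select_by_cost(patients, slots):
    """Fill `slots` care-program places with the patients who cost most in the past
    (past cost used as a proxy for future need, as in the quoted algorithms)."""
    return sorted(patients, key=lambda p: p[2], reverse=True)[:slots]


def select_by_illness(patients, slots):
    """Fill `slots` places with the sickest patients (the target we actually want)."""
    return sorted(patients, key=lambda p: p[1], reverse=True)[:slots]


def count_by_group(selected):
    """Count how many selected patients belong to each group."""
    counts = {group: 0 for group in SPEND_PER_UNIT}
    for group, _, _ in selected:
        counts[group] += 1
    return counts


if __name__ == "__main__":
    patients = build_patients()
    print(count_by_group(select_by_cost(patients, 6)))     # {'A': 4, 'B': 2}
    print(count_by_group(select_by_illness(patients, 6)))  # {'A': 3, 'B': 3}
```

Same illness, yet the cost-based ranking gives group B only two of the six places. Nothing in those few lines is "intelligent" or "racist": the software faithfully learns how money was spent, not how sick people were, and the bias comes entirely from the human choice of past cost as the target.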

Where the REAL problems are, or begin

Personally, practices like “predicting which patients would be expensive in the future based on who was expensive in the past” seem to me the same as “rating patients by their profitability” (or at least dangerously close to it): that is, intrinsically obscene. It may be a US-only problem, but it feels really bad anyway, sorry. Regardless of AI, and before AI. Same here:

“The best practices and governance of AI in healthcare should include the release of factsheets containing fairness test results and should involve multi-stakeholder participation on validating the entire AI lifecycle and also the organizational/human processes that surround the AI system.”

The paragraph above, from one of the linked articles, describes something that should be a given in any decent society… well before the arrival of AI, and regardless of it. Remove “AI” from that paragraph, and it still makes perfect sense, and is perfectly obvious. Or it should be.

Last but not least… Google? Google?

It is not good that Google, of all actors, develops its own breast cancer screening tools, or anything else healthcare-related. Funding such work, yes. Contributing data to independent, accountable researchers, yes: the more the better. But doing it autonomously, which seems to be the meaning of that quote, is not good, no.

Who writes this, why, and how to help

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend more every year on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can continue to inform, for free, parents, teachers, decision makers, and everybody else who should know more about stuff like this. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!