Fewer responsibilities for algorithm-driven civil servants?

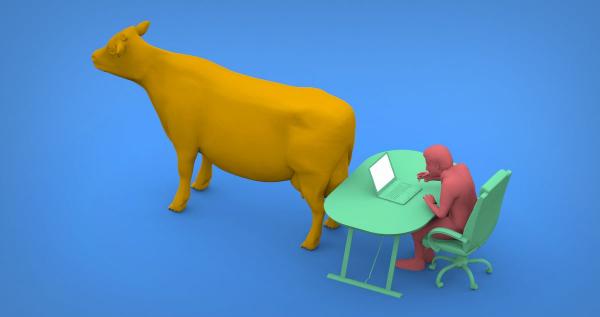

Why have such servants, then?

Locked down by the coronavirus, Italy is trying to plan what comes, or should come, after it. This includes innovation in its Public Administrations (PAs), to make them more efficient, responsive and resilient. A major proposal under discussion these days, called the Colao Plan after its coordinator, contains a potentially worrying action about PAs.

Simplification of PA procedures, says point 23 of the Plan, should also be achieved by “reducing the scope of responsibilities of civil servants, following their adoption of procedures ruled by algorithms”.

From Public Administration and Algorithmic Decision:

Italian courts have already ruled that automatic or partially automatic decisions by Public Administrations must respect all of the following principles:

- “Knowability”: citizens have the right to know which automatic processes decide, or drive decisions about them, and how they work

- Non-exclusivity: decisions that have legal effects on people should not be taken exclusively by automatic procedures

- No algorithmic discrimination: algorithms should not discriminate against any given group (Spoiler: it happens a lot)

In addition:

- Algorithm-based decisions must come with a comprehensible explanation of how they worked, and of which laws and regulations they implemented

- Citizens must always have the concrete possibility to know which specific PA office is imputable for the automatic decision

Judging from the experience with “artificial intelligence” so far, “knowing how an algorithm worked” is sometimes so hard as to be practically impossible.

The “imputable” part is much simpler, at least on paper, partly because it follows directly from a 2018 EU resolution on Civil Law Rules on Robotics, which, among other things, states:

“whereas in the scenario where a robot can take autonomous decisions, the traditional rules will not suffice to give rise to legal liability for damage caused by a robot, since they would not make it possible to identify the party responsible for providing compensation and to require that party to make good the damage it has caused”

(VERY GENERAL!!!) Conclusion

That action of the Colao Plan (or any other similar action in any country, ever, because this is the point of this post) must:

- Be realized without relieving the Public Administration that implemented the automatic process of all responsibility

- Clearly say who pays the bills for the errors made by any specific algorithm

- Not be a merely digital, literal translation of existing, likely overcomplicated processes

Who writes this, why, and how to help

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend more every year on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can continue to inform, for free, parents, teachers, decision makers, and everybody else who should know more about stuff like this. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!