Takeaway from a ridiculous YouTube copyright case

Automatic content filtering does not work. Period.

This month, a department of the New York University School of Law had to explain in detail “how the most sophisticated copyright filter in the world prevented them from… explaining copyright law!”

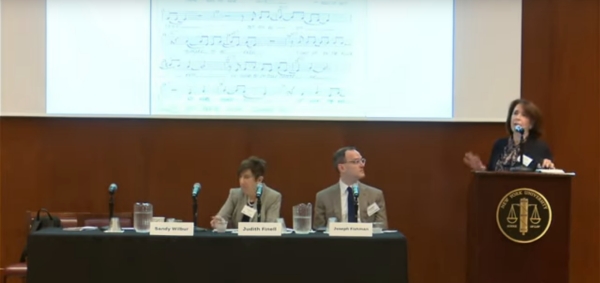

Earlier on, they had posted on YouTube a video of a debate over “how and when one song can infringe the copyright of another”.

That video was automatically “flagged by YouTube’s Content ID system for multiple claims of infringement” by one of the music companies that own the song used as an example in the debate.

And then they had to use all their knowledge and clout, wasting a lot of time and money for no good reason, to make YouTube cancel the claims and put the video back online. This is what they learned (nothing really new, alas, but it is useful anyway to have such recent and detailed proof):

First, how challenging it is to dispute an allegation of infringement by large rightsholders… even for a center that is “home to some of the top technology and intellectual property scholars in the world, as well as people who have actually operated the notice and takedown processes for large online platforms”. For an average Joe, it would be impossible, period. Even if he is surely right.

Second, it highlights the imperfect nature of automated content screening and the importance of process when automation goes wrong.

A system that assumes any match to an existing work is infringement needs a robust process to deal with the situations where that is not the case: “No matter how much automation allows you to scale, the system will still require informed and fair human review at some point”.

Third: it highlights the costs of things going wrong. The YouTube copyright enforcement system is likely the most expensive and sophisticated copyright enforcement system ever created. If even this system has these types of flaws, it is likely that the systems set up by smaller sites will be even less perfect.

Executive summary: automated online censorship, I mean: content filtering, simply cannot work alone. It simply can’t. Never forget it.

(This post was drafted in March 2020, but only put online in August, because… my coronavirus reports, of course.)

Who writes this, why, and how to help

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend every year more on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can continue to inform, for free, parents, teachers, decision makers, and everybody else who should know more stuff like this. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!