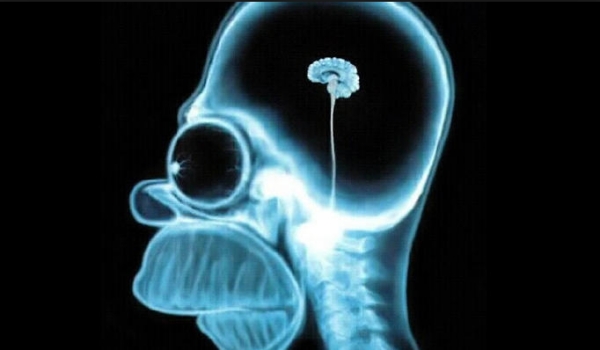

Fear superstupidity, not (artificial) superintelligence

Just a few things already said, but WELL said.

You should fear Super Stupidity, not Super Intelligence, said D. Pereira about one year ago. The few paragraphs below explain why, summarizing his own words with a few extra comments and links of mine.

Weak, not real, Artificial Intelligence (AI)

Today we are still living in the era of weak or narrow AI, very far from general AI, and even further from a potential Super Intelligence. Whatever we call it, this technology is a huge opportunity to put algorithms to work together with humans to solve some of our biggest challenges: climate change, poverty, health and well-being, and so on.

The problem is that we are not really worrying enough about those problems, and that neglect may be the real Super Stupidity, not Super Intelligence (artificial or not). At the very least, the current attitude of most media, lawmakers, and people in general is “a dangerous distraction” from the problems that “the rise of computing itself brings to us”: from unemployment to digital neocolonialism, to access to quality education and to fair and effective healthcare.

For example, what we call AI or machine/deep learning these days is making us face big challenges around the automation of tasks (not jobs). More and more, we will need to see jobs as combinations of tasks: some repetitive and lacking creativity (and therefore open to automation), others not (and therefore still for humans to perform).

Worrying about superintelligence without first tackling these problems is only superstupidity: “Let’s focus on real problems, and how to use the incredible technology yet at our disposal for our own good”. This, however, should happen without “falling into technology overregulation”.

I could not agree more, and these are just a few of the many reasons why I say so:

Who writes this, why, and how to help

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend more every year on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can continue to inform, for free, parents, teachers, decision makers, and everybody else who should know more about things like this. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!