Two implications of the end of Moore's Law

What do we do when computers stop being more powerful every year?

MIT Technology Review has just written that Moore’s Law fueled the prosperity of the last 50 years, but that its end is now in sight.

Fifty-five years ago, Gordon Moore forecast that the number of components on an integrated circuit would double every year, and that this would lead society to the promised land, namely “such wonders as home computers—or at least terminals connected to a central computer—automatic controls for automobiles, and personal portable communications equipment”. And almost all of MIT Technology Review’s “most important breakthrough technologies of the year” since 2001 are possible “only because of the computation advances described by Moore’s Law”.

What does this mean NOW?

The article describes how and why Moore’s Law may finally be coming to an end. Two effects of this scenario have particularly interesting implications (for me, at least):

1: “those developing AI and other applications will miss the decreases in cost and increases in performance delivered by Moore’s Law… really smart people in AI who aren’t aware of the hardware constraints facing long-term advances in computing”

My translation: if you are investing in AI stocks, please seriously reconsider the wisdom of such an investment.

2: “Wanted: A Marshall Plan for chips, because general economic growth cannot continue without improvements of microchips”

My translation (at least for Europe): the time is coming for the European microprocessors and FPGAs that I proposed back in 2012!

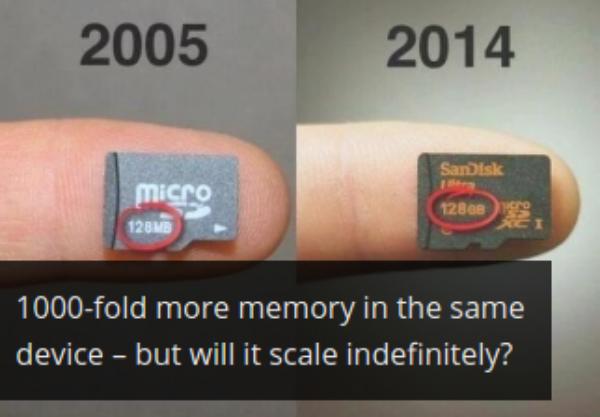

Image source: “Of Memes and Memory and Moore’s Law”

Who writes this, why, and how to help

I am Marco Fioretti, a tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend more every year on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can continue to inform, for free, parents, teachers, decision makers, and everybody else who should know more about things like this. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!