Let self-driving cars kill pedestrians. Or not?

They made a survey about driverless cars and found… basic biology. What does this mean?

I just read here that, in one survey,

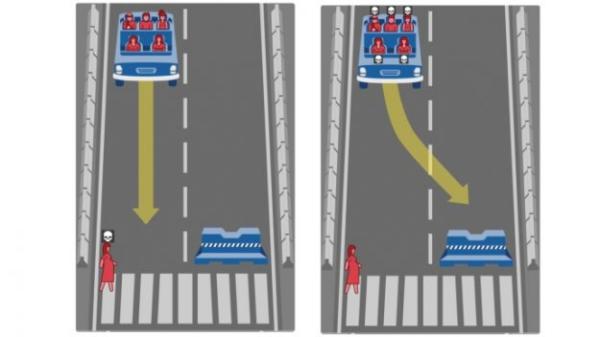

- “76% of people agreed that a driverless car should [be programmed to] sacrifice its passenger rather than plow into and kill 10 pedestrians, to minimize deaths”

- But when people were asked whether they would buy a car controlled by such a moral algorithm, “their enthusiasm cooled”. Those surveyed said they would much rather purchase a car programmed to protect themselves instead of pedestrians.

Self-preservation instinct, working as designed?

What a surprise, right? The same post notes that Mercedes solved this problem in 2016. It decided to program its cars to kill everybody else before its own paying customers, I mean drivers.

In another poll of about 2000 people, the majority believed that “autonomous cars should always make the decision to cause the least amount of fatalities”.

Decisions? Which decisions?

The Mercedes “decision” is not a decision. It’s just business as usual. Heck, unless laws kick in, Mercedes may even make both a “kill me” and a “kill others” version of their software, making the former the default and the latter an optional, expensive upgrade. Capitalism is wonderful.

The wish for the “decision that causes the least amount of fatalities” is, instead, really naive. At least if you really mean it.

Remember that, eventually, these wishes must become enforceable, well-defined laws and insurance policies. And there are at least two big obstacles to implementing that decision.

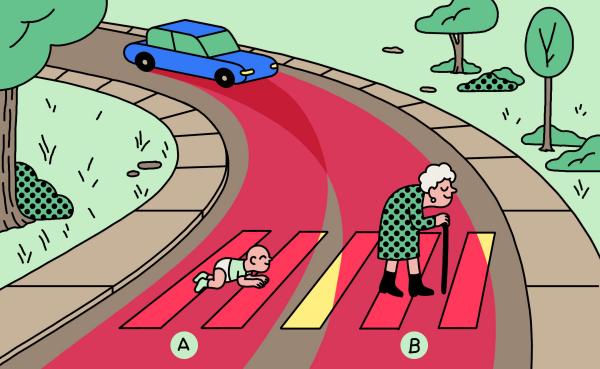

One is that, if you take it literally, you need nothing less than a crystal ball to really know in advance “which decision causes the least amount of fatalities”. And that holds even in the purely ideal case in which the image recognition software never classifies an occupied baby carrier as a backpack, a dog as a baby, a long single file as one person, or a person of color as non-human. Or vice versa.

Another big issue is that not all lives have the same value. And I am not talking about “my life vs yours”. I’m talking about deciding whom to hit between an apparently healthy kid and someone very old, or maybe in a wheelchair.

Your license and algorithm, please

I’m also talking about the fact that car drivers want freedom and car makers want, no, need to sell globally. But life value assessments (and the laws mirroring them), debatable as they are, vary from country to country.

Moral, driverless cars vs Brexit

Theoretically, a driverless car able to save lives should stop at every border to load the software valid on the other side. Assuming it can run it. I can’t wait to see a poll asking all residents of the island of Ireland how the post-Brexit UK-EU trade deals should concretely regulate moral, driverless cars.

The real solution: change the problem

All this makes it quite likely that, with big enough numbers, even the most sophisticated fatality-reducing algorithms won’t save more lives than one that randomly decides which way to steer the car.

Let self-driving cars kill some pedestrians. You have no other choice. Unless you go back to reality, and reduce and redefine the whole problem, of course.

Reality is not just that no algorithm can satisfy impossible expectations. Reality is that using private cars exactly like, and as much as, in the 20th century is not possible anymore, regardless of who drives or what fuel is used. Reality means, in this order:

- Worldwide replacement of private cars with mass transit and ride hailing, to the maximum possible extent

- Management of self-driving cars as on-demand micro trains

- Acceptance of deaths by driverless cars, because they will be far fewer than today

Who writes this, and why

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend every year more on how software is used AROUND you.