Flawed algorithms grade students who are told to cheat to get into college

We’re really reaching Peak College Bubble here…

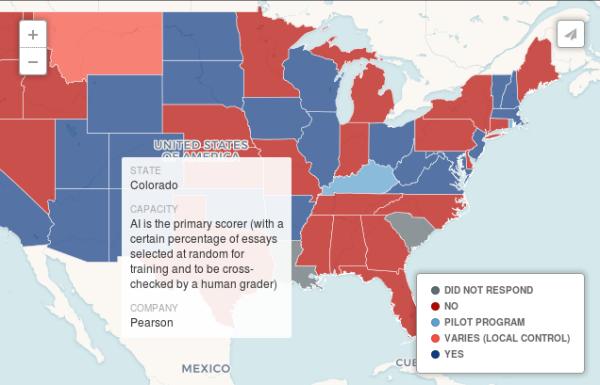

Every year, millions of American students take tests whose results decide, or at least influence, who will be able to go to the best colleges, or how much money will be available for schools and teachers.

In recent years, tests made “manually” and then graded by human teachers have been increasingly replaced with computerized tests graded by software programs.

The reasons are obvious, and very compelling: big cost savings, and the possibility of giving immediate feedback to both students and school boards.

Fact is, an investigation by Motherboard has found that millions of essays are graded by flawed software.

The “flaws” have two main forms. One, which has already appeared in many other fields of the “Artificial Intelligence” (AI) business, is bias against certain demographic groups. The other is the ease with which some systems can “be fooled by [machine-generated] nonsense essays with sophisticated vocabulary”.

A machine is only as good as its teacher

Essay-scoring programs are trained to recognize which text patterns appear more frequently in essays that received lower or higher than average grades from human graders. They then predict what score a human would assign to an essay, based on those patterns.

The problem is that this procedure preserves, and sometimes exacerbates, existing discrimination. As a hypothetical example, imagine a state where the majority of human graders dislike (even unconsciously!), and consequently rate lower, a specific style of writing that is statistically more frequent in, say, students from Greenland. Any software trained by looking at the work of those people, and the grades they gave, will discriminate against students from Greenland just as unfairly. Besides, any software has a hard time evaluating anything that cannot be measured objectively, like, you know, creativity.
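To make the mechanism concrete, here is a deliberately tiny sketch in Python. It is not how any real scoring engine works: the "model" just remembers the average human grade seen next to each word, and the training essays, the grades and the dialect marker "aye" are all invented for illustration. Even so, it shows how a penalty that exists only in the graders' habits ends up inside the software.

```python
from collections import defaultdict

def train(graded_essays):
    """Learn the average human grade associated with each word."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for text, grade in graded_essays:
        # Count each word once per essay, however often it is repeated.
        for word in set(text.lower().split()):
            totals[word] += grade
            counts[word] += 1
    return {word: totals[word] / counts[word] for word in totals}

def predict(model, text, default=3.0):
    """Predict a grade as the mean of the learned per-word averages."""
    words = text.lower().split()
    scores = [model.get(word, default) for word in words]
    return sum(scores) / len(scores) if scores else default

# Hypothetical training data: the human graders marked down every essay
# containing the dialect marker "aye", regardless of its content.
training = [
    ("the argument is clear and well supported", 5),
    ("the evidence is strong and well organized", 5),
    ("aye the argument is clear and well supported", 3),
    ("aye the evidence is strong and well organized", 3),
]

model = train(training)
plain = predict(model, "the argument is strong and well supported")
dialect = predict(model, "aye the argument is strong and well supported")
print(plain, dialect)
```

The two test essays make exactly the same argument, yet the one with the dialect marker gets the lower predicted grade, simply because the graders in the training data behaved that way.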

Things are even more interesting when considering that it is very hard, even for experts, to understand why, exactly, an automatically trained AI algorithm did what it did, in order to fix it. And there is more.

Even gibberish can get you into college

Several years ago, an MIT team developed the Basic Automatic B.S. Essay Language (BABEL) Generator, a program that generates meaningless essays by patching together strings of sophisticated words. The result? The nonsense essays consistently received high, sometimes perfect, scores when run through several different scoring engines.
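Why would gibberish score well? A toy example helps. The Python sketch below is neither the real BABEL Generator nor any real scoring engine: the word list, the scoring formula and the 0 to 6 scale are all assumptions made up for illustration. The scorer only looks at surface features (word length and essay length), which is enough to see how text with no meaning at all can hit the top of the scale.

```python
import random

# Hypothetical list of "sophisticated" words, invented for this sketch.
FANCY_WORDS = [
    "epistemological", "juxtaposition", "paradigmatic", "quintessential",
    "verisimilitude", "notwithstanding", "heterogeneous", "ramifications",
]

def nonsense_essay(n_words=60, seed=0):
    """Glue together randomly chosen fancy words, with no meaning at all."""
    rng = random.Random(seed)
    return " ".join(rng.choice(FANCY_WORDS) for _ in range(n_words))

def naive_score(text, max_score=6):
    """Score an essay from surface features only; meaning is never checked."""
    words = text.split()
    if not words:
        return 0
    avg_len = sum(len(word) for word in words) / len(words)
    # Longer words and longer essays both push the score up.
    raw = avg_len / 2 + len(words) / 30
    return min(max_score, round(raw))

honest = "Tests should be fair because grades decide who gets a chance"
gibberish = nonsense_essay()

honest_score = naive_score(honest)
gibberish_score = naive_score(gibberish)
print(honest_score, gibberish_score)
```

With this made-up formula the short, sensible sentence lands in the middle of the scale, while the random word salad gets the maximum score.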

A useful system… if used by humans

Any grading software that promotes students who submit literally random bundles of text, or unfairly discriminates against others in unpredictable ways, is not worth much, no matter how cheap and fast it is. Still, there is probably a place for such systems, as long as quality control, that is humans grading wide samples of the same essays to spot problems, becomes much better than today. This is not the reason why I wrote this post, however.
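The quality control mentioned above could look, in its simplest form, like the following Python sketch. Everything in it is an assumption made for illustration: the function names, the one-point tolerance and the sample grades are hypothetical, and a real audit would need far more care (sample size, grader agreement, breakdown by demographic group).

```python
import random

def audit_sample(essay_ids, sample_size, seed=0):
    """Pick a random sample of essays to hand to human graders."""
    ids = list(essay_ids)
    rng = random.Random(seed)
    return rng.sample(ids, min(sample_size, len(ids)))

def disagreement_rate(machine_grades, human_grades, tolerance=1):
    """Fraction of double-graded essays where machine and human differ
    by more than `tolerance` points."""
    shared = machine_grades.keys() & human_grades.keys()
    if not shared:
        return 0.0
    flagged = [
        essay for essay in shared
        if abs(machine_grades[essay] - human_grades[essay]) > tolerance
    ]
    return len(flagged) / len(shared)

# Hypothetical grades for four essays that were scored twice.
machine = {"a": 6, "b": 4, "c": 3, "d": 5}
human = {"a": 2, "b": 4, "c": 3, "d": 4}

to_review = audit_sample(range(100), 10)
rate = disagreement_rate(machine, human)
print(to_review, rate)
```

A disagreement rate that is high, or much higher for one group of students than for another, would be the signal that the software needs fixing before its grades are trusted.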

Flawed grading algorithms, in a real, weird world

What Motherboard found is that, let’s say it again, flawed algorithms are giving wrong estimates of which people deserve higher grades and, therefore, more opportunities than others.

Cool, isn’t it? No, of course not. But it becomes much, much more uncool when you put this fact side by side with another one: many students are pushed to cheat on social media, exactly because not even (flawed) good grades are enough these days.

And it gets even more (not!) funny when you think that there are people suggesting that only people passing tests should be allowed to vote:

- “Don’t let ignorant people vote”

- “The right to vote should be restricted to those with knowledge”

- “Should the government weed out ignorant voters?”

Image sources: Motherboard article and the Babel Generator

Who writes this, why, and how to help

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend every year more on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can continue to inform, for free, parents, teachers, decision makers, and everybody else who should know more stuff like this. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!