Social media infer a lot and it's all YOUR fault

Inference plus facial recognition are BAD, but what makes them actually toxic is you.

inference, noun: “a guess that you make or an opinion that you form based on the [other] information that you have”, which you then assume to be true.

THANKS for telling the bad guys who and where **I** am


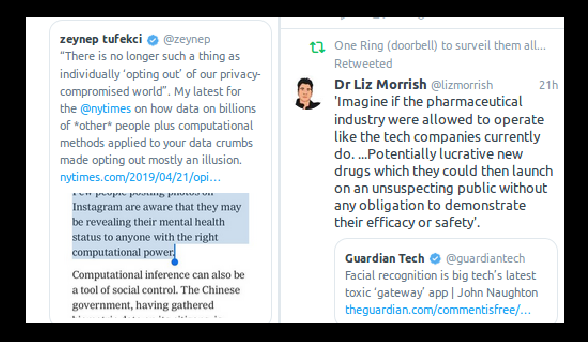

The first things I saw this morning in my Twitter timeline were two back-to-back tweets on the same topic: inference by social media, its dangers, and how much of it is YOUR fault (where “YOUR” means “EVERY average social media user”). The next two sections, made entirely of quotes from two articles by Zeynep Tufekci and John Naughton (which I strongly urge you to read!!!), summarize the situation.

YOU feed the (broken!) discrimination of your friends

People concerned about privacy often stay off social media, or if they’re on it, they post cautiously.

But they are wrong. Because of technological advances and the sheer amount of data now available about billions of OTHER people, computer algorithms… can now infer, with a sufficiently high degree of accuracy, a wide range of things about you that you may have never disclosed.

Unless [these tools] are properly regulated, we could [soon] be hired, fired, granted or denied insurance, accepted to or rejected from college, rented housing and extended or denied credit based on facts that are inferred about us.

This is worrisome enough when it involves correct inferences. But computational inference also often gets things wrong, in ways almost impossible to detect.

One troubling example of inference involves your phone number. Even if you have stayed off Facebook and other social media, your phone number is almost certainly in many other people’s contact lists on their phones. If they use Facebook (or Instagram or WhatsApp), they have been prompted to upload their contacts to help find their “friends,” which many people do.

Once your number surfaces in a few uploads, Facebook can place you in a social network, which helps it infer things about you since we tend to resemble the people in our social set.
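To make that mechanism concrete, here is a toy sketch of how a platform *could* guess something about a person who never signed up, using nothing but other people's uploaded address books. Everything in it is hypothetical: the phone numbers, the user names, the `political_leaning` attribute, and the simple majority-vote rule (a basic form of homophily-based inference, "you resemble the people who have your number"). Nobody outside those companies knows exactly what their real systems do; this only shows why staying off a platform does not stop the guessing.

```python
from collections import Counter, defaultdict

# HYPOTHETICAL users who DO use the platform: each one has disclosed an
# attribute and uploaded the contact list from their phone.
users = {
    "alice": {"political_leaning": "left",  "contacts": ["+39-555-0101", "+39-555-0199"]},
    "bob":   {"political_leaning": "left",  "contacts": ["+39-555-0101"]},
    "carol": {"political_leaning": "right", "contacts": ["+39-555-0101", "+39-555-0142"]},
    "dave":  {"political_leaning": "left",  "contacts": ["+39-555-0142"]},
}


def build_shadow_graph(users):
    """Map every phone number found in uploaded contacts to the users who uploaded it."""
    graph = defaultdict(set)
    for name, data in users.items():
        for number in data["contacts"]:
            graph[number].add(name)
    return graph


def infer_attribute(number, graph, users, attribute):
    """Guess a non-user's attribute by majority vote among the users who have their number.

    Returns (guess, share_of_votes), or (None, 0.0) if nobody uploaded this number.
    """
    neighbors = graph.get(number, set())  # .get() avoids adding empty entries
    if not neighbors:
        return None, 0.0
    votes = Counter(users[n][attribute] for n in neighbors)
    guess, count = votes.most_common(1)[0]
    return guess, count / len(neighbors)


graph = build_shadow_graph(users)
# "+39-555-0101" belongs to someone who never joined, yet three users uploaded it.
print(infer_attribute("+39-555-0101", graph, users, "political_leaning"))
```

Here the owner of "+39-555-0101" gets labeled "left" with two thirds of the votes, without ever having said a word. Note that the function gives a tidy answer even when it is wrong: if that person actually leans the other way, nothing in the data would reveal the mistake.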

Facial recognition closes the circle

THANKS for teaching the bad guys to recognize ME


Automatic facial recognition has come on in leaps and bounds as cameras, sensors and machine-learning software have improved and as the supply of training data (images from social media) has multiplied. We’ve now reached the point where it’s possible to capture images of people’s faces and identify them in real time.

[And the real problem is that] if this technology becomes normalised then in the end it will be everywhere; all human beings will essentially be machine-identifiable wherever they go. At that point corporations and governments will have a powerful tool for sorting and categorising populations.

(In other news: “US facial recognition will cover 97 percent of departing airline passengers within four years”)

This has been written all over the place

None of this is news. Here are just a few examples, but you can find hundreds of them online:

- “No Loan? Thank online data collection” (2013)

- “if you’re not paying for the product, YOUR FRIENDS are the product” (said in 2015 and 2018)

- Price of car insurance based on Facebook posts (2016)

- Who gives Facebook data about non-Facebook users? Their “friends”, of course (2018)

- Who explicitly tells Facebook which photos are more sensitive or embarrassing? You, of course

- Using phone numbers as online user names is unbelievably dumb (2018)

- Using WhatsApp is unbelievably rude to your friends who do NOT use it (2018)

But we all stick to the WRONG solutions

One particularly depressing side of all this mess is how politicians insist on pushing the wrong kinds of actions and directives, when it would be both much simpler AND much more effective to regulate just a few of the right things and to promote the right kind of personal cloud computing.

Who writes this, why, and how to help

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend more every year on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can keep informing, for free, parents, teachers, decision makers, and everybody else who should know more about stuff like this. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!