Machine learning for security clearances... of a Snowden Generation?

What happens when you spend tons of money on sophisticated people-monitoring algorithms, but the people to monitor…

OK, let’s start from the beginning: I just read that in 2018 the US government announced a new security clearance program - including for individuals in civilian roles - which would run “continuous evaluations” of all applicants, thanks to machine learning technology.

What could possibly go wrong?

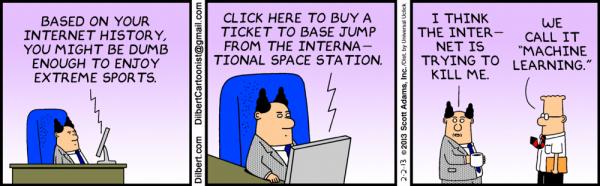

<u><em><strong>CAPTION:</strong>

<a href="https://dilbert.com/strip/2013-02-02" target="_blank">Click for source</a>

</em></u>

The article itself highlights the obvious risks of such a system “going off the rails”, but the really interesting questions here are:

- Would we ever know if the system is misbehaving?

- Above all: What if the system works as intended?

At least in certain cases, we may never know the answer to the first question because, as the article says, certain systems “offer little to no insight as to how their highly accurate predictions are actually made”. But hey, we are only talking about national security, no big deal, right?

So let’s focus on the second question. The article does correctly acknowledge my first thought when I read its title: “if the system works, it might actually generate deeper problems still”. Because this “system”, which is nothing but a military version of the Chinese “social credit scoring” that all of us in the West love to hate, would:

- have “a very pronounced effect on the behavior of its subjects”

- compromise the privacy and agency of the very people tasked with upholding national security

- be a nightmare for whistleblowers - even those who use internal processes - who could be flagged as potential risks

I have a feeling, however, that even this is not the most interesting “unintended consequence” of this program. What if it ends up unused, wasting lots of taxpayers’ money, simply because fewer and fewer people come to it every year?

Because, you know, right after “what if it works?”, the next thing that came to my mind was what I wrote about the CIA director “vs” millennials, back in 2017. In short, that director complained that millennials have different instincts than the people who hired them, and that this cultural difference may be the reason why “millennials leak secrets”.

But the real reasons for such differences may be (see my 2017 post for the full version) that:

- It is the very system-wide [sociological problems of the 21st century] that “undermine the way the Western security state operates”. Because…

- “Generation Z will arrive [in the workplace] brutalized and atomized by three generations of diminished expectations and dog-eat-dog economic liberalism.”

- This “undermines any ability to create a meaningful republic”

- “[in such a world] where [to] recruit the jailers? And how do you ensure their loyalty?”

- Edward Snowden seems to be just a product, or a forerunner, of this state of things

In other words…

That’s the 21st CENTURY, baby. The CENTURY! And there’s nothing machine learning can do about it. Nothing!

That very cool machine learning system may end up as a waste of money because fewer and fewer people want to work (with enough “loyalty”, at least) for the organization that runs it. Then what?

Well, if the kind of citizens that states need in order to be surveillance states is vanishing, all those states can eventually do is become more open and inclusive. To become states, that is, that need fewer security clearances, not more machine learning.

Final exercise for the reader

Couple this potential lack of people interested in submitting to security clearance for national defense purposes with the apparent lack of people physically suitable for such tasks, and try to calculate the consequences:

Who writes this, why, and how to help

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend more every year on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can continue to inform, for free, parents, teachers, decision makers, and everybody else who should know more about things like this. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!