Limits, and one big side effect, of granting personhood status to "robots"

Let’s play conspiracy theories for a moment: what is the REAL reason why the European Parliament is proposing to treat robots as human beings? Even better: WHICH robots should benefit from such recognition? WHAT is a robot, anyway?

The EU Parliament proposal is described here. These are the most interesting passages of that article:

- “To be clear, the EU doesn’t want to imbue robots and AI with human rights, such as the right to vote, the right to life, or the right to own property. Nor is it wanting to recognize robots as self-conscious entities.”

- “Electronic personhood would turn each smart robot into a singular legal entity, each of whom would have to bear certain social responsibilities and obligations (exactly what these would be, we don’t yet know).”

- “Under this provision, liability would reside with the robot itself. We wouldn’t be able to throw a machine in jail, but we could require all smart bots to be insured as independent entities.”

WHICH robots? WHY robots?

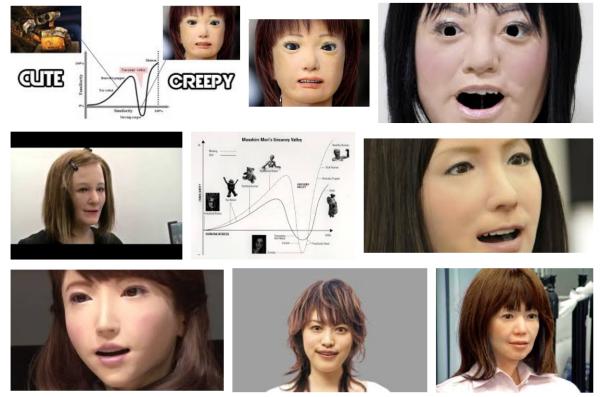

NOW is the time to discover (or IGNORE?) the Uncanny Valley!


Besides the fact that, as that article says, by “knowingly granting personhood status to entities that aren’t actually persons, we’re… diminishing what it means to be a person”, the proposal seems to have a few limits.

First of all, which robots? All kinds, or only those in, or beyond, the “uncanny valley”? Where do you draw the line between what is a robot and what is not?

Above all: why robots? If personhood should be granted to anything digital, it should go to software, or Artificial Intelligence if you will. It is hard to see why it should be granted to their mere interfaces, that is, to objects that would be paperweights if no software controlled them.

See what I wrote about “robotics surgeons”: robots are nothing but software inside a box that more or less looks like a human body, or some part of it.

What if a robot gets hacked, that is, if its software is replaced by someone else’s?

Seriously: let’s talk about software, artificial intelligence, machine learning… Let’s talk about the responsibility of the humans who make, configure or crack THOSE things. But not about the “personhood of robots”. Giving personhood to robots risks being like giving personhood to revolvers, or food processors.

Next issue: which law should regulate, and eventually punish, these “persons”? The software that controls a robot may be half the planet away from it. And how do you punish something immaterial and infinitely copiable? This comment on the Gizmodo article describes this side of the question well:

“I’m sorry that the neural network that I made to day-trade the stock market violated numerous trading rules and drove several companies into bankruptcy. Feel free to delete the program, or imprison it on a thumb drive. I’ll write a new one tomorrow, and I’m sure that it will be law-abiding.”

And now, the conspiracy theory. If robots can be people, then…

Let’s now switch to conspiracy theory mode, just for fun. The article quoted above points out that “this measure would be similar to corporate personhood: an agreed upon legal fiction designed to smooth business processes by giving corporations rights typically afforded to actual persons, namely humans.”

And this is where the “conspiracy” lies.

Whether one means it or not, granting (or even just seriously debating) the “personhood” of something artificial, immaterial, ubiquitous and immortal further legitimates and perpetuates the idea that corporations, which are just as artificial, immaterial, ubiquitous and immortal as software, deserve all the personhood they currently have, if not more, even though there are reasons to question that position. Maybe the right starting point for approaching software (again: not “robots”) personhood is to analyse and redefine what the same “legal fiction” has yielded with corporations. Here is some food for thought, just to get started:

- “granting personhood to the machines and corporations that strip it away from human beings?”

- “EU Commission still casts usual corporate suspects for the leading roles”

- “Why corporations should have their special status reviewed”

- “it is truly ironic that the death penalty and hell are reserved only to natural persons.”

- “Corporations Have More Constitutional Rights Than Actual People”

Who writes this, why, and how to help

I am Marco Fioretti, tech writer and aspiring polymath doing human-digital research and popularization.

I do it because YOUR civil rights and the quality of YOUR life depend more and more every year on how software is used AROUND you.

To this end, I have already shared more than a million words on this blog, without any paywall or user tracking, and am sharing the next million through a newsletter, also without any paywall.

The more direct support I get, the more I can keep informing parents, teachers, decision makers, and everybody else who should know more stuff like this, for free. You can support me with paid subscriptions to my newsletter, donations via PayPal (mfioretti@nexaima.net) or LiberaPay, or in any of the other ways listed here. THANKS for your support!