Autonomous cars will kill the car industry

Stay away from their stocks. Seriously.

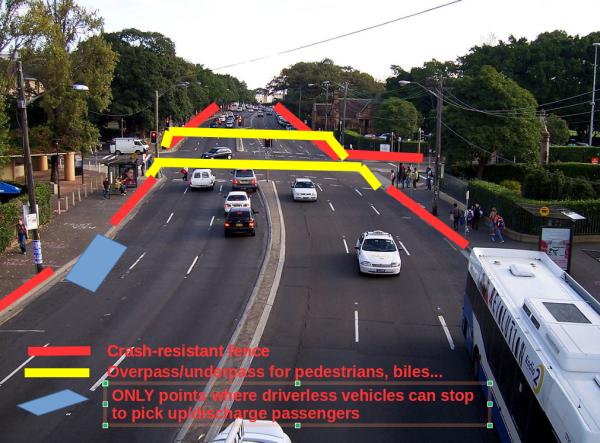

This is what I mean by “treating driverless cars like trains”.

Six months ago, I wrote that “the REAL name of self-driving cars must become something like

The REAL name, and value, of self-driving cars

The first issue with self-driving cars may be in how you CALL them.